The Cold Email Masterclass

Lesson 7

Analyze, Optimize, and Scale

A good optimization campaign separates mediocre cold email efforts from those that become truly successful.

- Your goal with email analysis is to get the maximum possible benefit from your cold messages with the smallest possible investment of time, effort and resources.

- By conducting optimization before scaling your campaign, you’ll be able to take your most effective messages to the largest possible audience, improving your overall results.

Of course, if email optimization were easy, everyone would be doing it. The gap between those who use analysis effectively and those who are just “winging it” suggests that there’s still plenty of opportunity for most salespeople to improve their cold email results.

Doing so requires navigating the unique challenges associated with cold email testing, while at the same time implementing analytics best practices that aren’t always easy or intuitive.

The Challenge with Cold Email Testing

One of the fundamental building blocks of a cold email optimization campaign is the A/B test, in which two variations of an individual message are sent out in order to determine which one results in more conversions.

A/B testing is used frequently in the marketing world to compare the performance of landing pages and other web elements. In the case of cold emailing, testing different subject lines, opening lines, CTAs, button text and other variables can help your future messages perform better by homing in on the combinations most likely to produce a response from prospects.

That said, one of the challenges of traditional A/B testing is that you need a sufficiently large sample size to establish the statistical significance of your results.

Statistical significance tells you whether the results you’re seeing are likely to be caused by the variable you’re testing, or whether they could plausibly be due to random chance.
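To get a feel for what “sufficiently large” means, here is a rough Python sketch that uses the standard normal-approximation formula for comparing two proportions to estimate how many emails each variation would need. It is an illustration, not a feature of any email tool: the 1% and 2% response rates are hypothetical, and the significance level (alpha = 0.05) and 80% power are conventional defaults, not values this lesson prescribes.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate emails needed per variation to detect a change from
    p_baseline to p_target with a two-sided two-proportion z-test."""
    if not (0 < p_baseline < 1 and 0 < p_target < 1) or p_baseline == p_target:
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_baseline + p_target) / 2            # pooled rate under "no difference"
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                                      + p_target * (1 - p_target))) ** 2
    return math.ceil(numerator / (p_baseline - p_target) ** 2)


# Hypothetical goal: lift a 1% response rate to 2%.
print(sample_size_per_variant(0.01, 0.02))
```

For a jump from a 1% to a 2% response rate, the estimate comes out to more than 2,000 emails per variation, which is why most small cold email tests fall short of formal significance.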

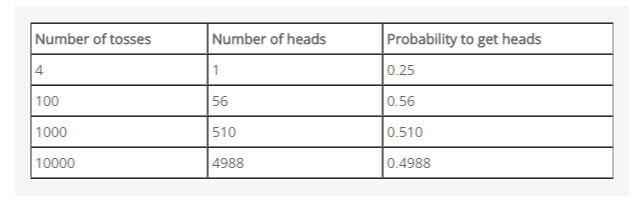

Imagine flipping a coin. With every flip, you have an equal probability of getting heads or tails. However, if you only flip your coin a few times, you could get a skewed sense of probability compared to carrying the same experiment out thousands of times.

The chart below from Basic Mathematics shows the probability of getting heads over different numbers of tosses, as determined by a computer simulation: (image source: Basic Mathematics)
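If you’d like to reproduce this effect yourself, here is a small Python simulation. It is an illustration only, not the code behind the Basic Mathematics chart: it tosses a fair coin a given number of times and reports the share of heads, and the seed value is arbitrary, used only to make the run repeatable.

```python
import random


def proportion_heads(num_tosses: int, seed: int = 42) -> float:
    """Simulate fair coin tosses and return the share that came up heads."""
    if num_tosses < 1:
        raise ValueError("need at least one toss")
    rng = random.Random(seed)  # seeded so the run is reproducible
    heads = sum(rng.random() < 0.5 for _ in range(num_tosses))
    return heads / num_tosses


for n in (4, 10, 100, 1_000, 100_000):
    print(f"{n:>7} tosses: {proportion_heads(n):.3f} heads")
```

With only a handful of tosses, the share of heads can land far from 50%; with tens of thousands, it settles very close to it.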

Now, imagine that, instead of coin tosses, you’re testing cold email responses.

- If you send out four messages and get one response, you might determine that your odds of getting a response using that variation will always be 25%.

- However, because you’re testing such a small sample, you can’t have the same confidence in your results you’d have if you sent out 100, 1,000 or even 10,000 messages.
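One way to put numbers on that uncertainty is a confidence interval around the observed response rate. The Python sketch below uses the Wilson score interval, a common choice for small samples that this lesson doesn’t itself mention; the reply counts are hypothetical. By this measure, one reply out of four is consistent with a true response rate anywhere from about 5% to 70%, while 250 replies out of 1,000 narrows the range to about 22% to 28%.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a proportion (z = 1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p_hat + z ** 2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / trials
                               + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Same observed 25% response rate, very different levels of certainty.
for replies, sent in [(1, 4), (25, 100), (250, 1_000), (2_500, 10_000)]:
    low, high = wilson_interval(replies, sent)
    print(f"{replies}/{sent} replies: plausible response rate {low:.1%} to {high:.1%}")
```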

That doesn’t mean A/B testing isn’t useful for cold email. You can still gain valuable insight from split testing, as long as you understand how to collect it effectively and how to make informed decisions based on your results.

How to Optimize Your Cold Emails: A General Process

Since classic A/B split testing measures don’t apply neatly to cold emailing, think of your general goal as this: to send small batches of messages in order to see what works before scaling to larger audiences. Basically, send your message to 100 people and work out the kinks before sending it to 5,000.
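When you carve out that first small batch, it helps to pick it at random so the test group isn’t skewed (for example, toward the companies at the top of an alphabetical list). A minimal Python sketch, assuming your prospects are a simple list of email addresses; the function name and the example addresses are hypothetical.

```python
import random


def split_for_testing(prospects, test_size=100, seed=None):
    """Randomly pick a small test batch; the rest are held back for the rollout."""
    if test_size > len(prospects):
        raise ValueError("test batch is larger than the prospect list")
    shuffled = list(prospects)           # copy so the original list is untouched
    random.Random(seed).shuffle(shuffled)
    return shuffled[:test_size], shuffled[test_size:]


# Hypothetical list of 5,000 prospects
prospects = [f"prospect{i}@example.com" for i in range(5_000)]
test_batch, rollout = split_for_testing(prospects, test_size=100, seed=7)
variant_a, variant_b = test_batch[:50], test_batch[50:]  # two halves for an A/B test
print(len(variant_a), len(variant_b), len(rollout))
```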

Here’s how to do it:

Step #1: Set Your Baseline Metrics

Set a baseline for your optimization efforts, depending on the specific type of conversion you intend to track. We touched on this back in Lesson #1, but generally, this means tracking open rates, click-through rates or response rates.
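If you track raw counts, turning them into baseline rates is simple division. The Python sketch below is one way to do it, under the assumption that every rate is measured against delivered emails; some tools instead divide clicks by opens (a “click-to-open” rate), so check which definition your own reports use. The counts are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class CampaignCounts:
    delivered: int  # assumed: emails that reached an inbox (sent minus bounces)
    opened: int
    clicked: int
    replied: int


def baseline_rates(counts: CampaignCounts) -> dict:
    """Open, click-through and response rates, all relative to delivered emails."""
    if counts.delivered <= 0:
        raise ValueError("need at least one delivered email")
    return {
        "open_rate": counts.opened / counts.delivered,
        "click_through_rate": counts.clicked / counts.delivered,
        "response_rate": counts.replied / counts.delivered,
    }


# Hypothetical numbers from a past campaign
rates = baseline_rates(CampaignCounts(delivered=200, opened=50, clicked=6, replied=2))
for name, value in rates.items():
    print(f"{name}: {value:.1%}")
```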

There are two ways you can set your baseline metrics:

- Base them off of industry standards

- Base them off your current numbers

If you’ve been tracking your target metric, you may already have a baseline in mind. For instance, if you’ve been seeing a response rate of less than 1%, that’s a great starting point for your future optimization efforts.

If you don’t know where you currently stand, you might find it easier to set your baseline at industry averages (if they exist in your field). Find a starting point by searching the web using queries like:

- “Average cold email open rate in [industry]”

- “Cold email response rate in [industry]”

- “Good cold email response rate”

Leadfuze, for example, reports that the average cold email response rate is less than 1%. A question posted to Startups.co suggests an average conversion rate of 0.5%.

Yet again, I’m going to caution you not to overthink this.

Pick a number that feels right based on what you know about your own cold email performance and the average standards in your industry. You’ll revise it anyway once your first test gives you real data to work with.

Step #2: Set Goals for Your Target Metrics

Next, you need a goal to work towards. For example, you might aim to improve open rates by 10% or your response rates by 5%.

Pick a number that represents a modest improvement over your baseline. As you run different tests, you can make future goals more aggressive or dial back your expectations if you aren’t seeing the desired results.
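A goal like “improve open rates by 10%” can be read two ways, so it’s worth deciding up front which one you mean. The short Python sketch below (a hypothetical helper, not part of any tool) shows both readings: a relative lift of 10% turns a 20% open rate into 22%, while a 10-percentage-point lift turns it into 30%.

```python
import math


def target_rate(baseline: float, improvement: float, relative: bool = True) -> float:
    """Turn a goal like 'improve by 10%' into a concrete target rate.

    relative=True:  improvement is a fraction of the baseline (0.20 -> 0.22 for 0.10)
    relative=False: improvement is in percentage points (0.20 -> 0.30 for 0.10)
    """
    if relative:
        return baseline * (1 + improvement)
    return baseline + improvement


baseline_open_rate = 0.20  # hypothetical baseline
print(f"{target_rate(baseline_open_rate, 0.10):.0%}")                  # relative lift
print(f"{target_rate(baseline_open_rate, 0.10, relative=False):.0%}")  # absolute lift
```

Relative goals are usually the gentler choice when your baseline is small (for example, a response rate under 1%).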

Step #3: Design Your Experiments

When it comes to test design, you have two options: testing large, sweeping changes vs. making smaller tweaks.

For example, say your goal is to increase your open rates by a huge margin. In this case:

- Testing your CTA won’t be useful, since prospects don’t interact with it until they’ve decided to open your message

- Changing a single word in your subject line might make a small difference, but testing an entirely new structure could get you bigger results.

Design the experiments that are most likely to get you to your stated goals. Need big improvements? Test big changes. Need to refine an already-successful message? Think smaller.

It’s also an A/B split testing best practice to test a single variable at a time. If you tweak your subject line, your greeting, your CTA and the inclusion of a P.S. all at once, how will you know which variables contributed to the change you’re seeing?
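If you keep your email variations in a script or spreadsheet, you can enforce the one-variable rule mechanically. The Python sketch below assumes a hypothetical `EmailVariant` record with a few fields; `make_variant` refuses to build a test variation that changes more (or less) than one of them.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class EmailVariant:
    subject: str
    greeting: str
    cta: str
    postscript: str = ""


def make_variant(base: EmailVariant, **change) -> EmailVariant:
    """Build a test variation that differs from `base` in exactly one field."""
    if len(change) != 1:
        raise ValueError("change exactly one variable per test")
    variant = replace(base, **change)  # copies base, overriding the named field
    if variant == base:
        raise ValueError("the new value is identical to the control")
    return variant


def changed_fields(a: EmailVariant, b: EmailVariant) -> list:
    """List the field names that differ between two variations."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Hypothetical control email and a subject-line-only variation
control = EmailVariant(subject="Quick question", greeting="Hi Sam,",
                       cta="Open to a 10-minute call?")
variant_b = make_variant(control, subject="Idea for your Q3 pipeline")
print(changed_fields(control, variant_b))
```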

If you aren’t sure which single variable to test first, use the questions below for ideas.

- Questions to Increase Open Rates
  - Are my cold emails being delivered in the first place?
  - Am I targeting the right audience?
  - Can my subject line be improved? You can:
    - Test the different variations you developed back in Lesson #2
    - Test different formats (e.g. short vs. long, question vs. statement, etc.)
    - Test including or leaving out personalization fields
    - Test specific vs. general subject lines
  - Am I sending at the right time?

- Questions to Increase Click-Through Rates
  - Am I using hyperlinked text or a raw URL?
  - Would using a linked image produce better results?
  - How am I framing the benefits of clicking my link?
  - Am I sending prospects to an appropriate landing page for their needs?

- Questions to Increase Response Rates
  - Should I use a different greeting?
  - Can my opening line be improved?
  - Will a different template give me better results?
  - Is my email too long?
  - Is my tone off?
  - Has my copy inadvertently offended my prospects?
  - Is my CTA ask too big?
  - Have I clearly conveyed the benefits of my product or service?
  - Could I share a different piece of helpful content?
  - Would a postscript (P.S.) be helpful?
  - Am I following up an appropriate number of times?

This isn’t a comprehensive list of possible testing factors. Instead, use these questions as inspiration for the many different ways you could improve your cold email performance.

Step #4: Run Your Test

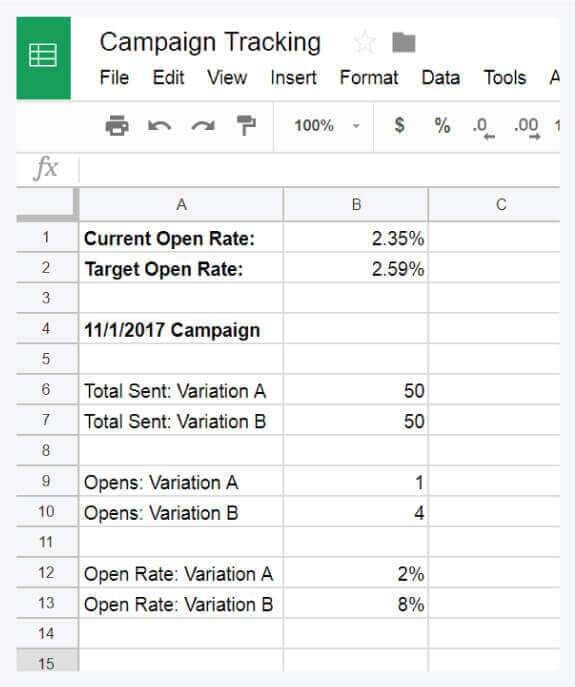

Let’s put all this into practice. Suppose you believe weak subject lines are causing your low open rates, and you want to increase open rates by 10%. You could:

- Come up with one short subject line variation and one longer subject line

- Send 50 cold emails using the short subject line and 50 cold emails using the longer alternative

- After a few days, compare the open rate of the short subject line emails with the open rate of the long subject line emails

Use your email provider’s built-in A/B testing technology, or record your results in a simple spreadsheet.

In the case above, variation B met the threshold for increasing open rates by at least 10% (as 8% is higher than the target 2.59%). Variation A did not.

This kind of test can’t be considered conclusive. It’s not statistically significant, so you can’t be sure the results you’re seeing aren’t due to random chance.

But it does give you one more data point to inform future tests. Run enough experiments like these, and general patterns will emerge that show you the best cold email approach for your audience.
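To make this concrete, the Python sketch below reads a small results table (the kind you might export from a spreadsheet), checks each variation against a target, and then runs Fisher’s exact test, a standard significance test for small two-group samples that this lesson doesn’t itself name. All numbers are hypothetical: 10 opens out of 50 for the short subject line, 13 out of 50 for the long one, and a 20% baseline with a 10% relative lift as the target. Variation B clears the target, yet the p-value lands well above the conventional 0.05 cutoff, which is exactly the “not conclusive” situation described above. If SciPy is installed, `scipy.stats.fisher_exact` performs the same test.

```python
import csv
import io
from math import comb

# Hypothetical results, laid out like a simple spreadsheet export.
RESULTS_CSV = """variation,subject_style,sent,opened
A,short,50,10
B,long,50,13
"""


def load_results(csv_text):
    """Return {variation: (sent, opened)} parsed from CSV text."""
    return {row["variation"]: (int(row["sent"]), int(row["opened"]))
            for row in csv.DictReader(io.StringIO(csv_text))}


def fisher_exact_two_sided(opened_a, sent_a, opened_b, sent_b):
    """Two-sided Fisher's exact test p-value for an opened/not-opened 2x2 table."""
    total_opened = opened_a + opened_b
    total_sent = sent_a + sent_b
    denom = comb(total_sent, total_opened)

    def prob(x):
        # Hypergeometric probability that variation A receives x of the opens
        return comb(sent_a, x) * comb(sent_b, total_opened - x) / denom

    observed = prob(opened_a)
    lo, hi = max(0, total_opened - sent_b), min(total_opened, sent_a)
    probs = [prob(x) for x in range(lo, hi + 1)]
    # Sum every table at least as unlikely as the observed one
    p = sum(q for q in probs if q <= observed * (1 + 1e-9))
    return min(1.0, p)


results = load_results(RESULTS_CSV)
target = 0.20 * 1.10  # hypothetical 20% baseline plus a 10% relative lift
for name, (sent, opened) in results.items():
    rate = opened / sent
    print(f"Variation {name}: {rate:.0%} opened, meets target: {rate >= target}")

(sent_a, opened_a), (sent_b, opened_b) = results["A"], results["B"]
p_value = fisher_exact_two_sided(opened_a, sent_a, opened_b, sent_b)
print(f"Two-sided p-value: {p_value:.2f}")
```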

One opportunity to expand on the data you collect is to actually call up prospects who don’t respond to your cold email. Steli Efti uses this process and describes it this way:

“Take a sample of the emails you send, and follow up with a phone call. Your objective in the call isn’t to make a sale, though it could lead to that. Instead, you want to find out what worked in your emails and what didn’t. You’re gathering intel.”

In his close.io blog post on the subject, he goes on to explain:

“When you start with a sample of 50 emails a day, it’s an opportunity to drill into the qualitative side and find out what connects to people on an emotional level. By making the call, you can gain valuable insights to your sales strategy and business that are unavailable to even the largest of data sets.”

If you aren’t sure why you’re getting the cold email A/B test results you’re seeing, this can be an especially valuable approach.
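If you try this, choose whom to call at random rather than picking the prospects you expect to be friendliest, so the feedback reflects your whole list. A minimal Python sketch, assuming you can export a list of everyone you emailed and a list of everyone who replied; the function name and addresses are hypothetical.

```python
import random


def pick_call_sample(sent, replied, sample_size, seed=None):
    """Choose a random subset of prospects who didn't reply, for follow-up calls."""
    replied_set = set(replied)
    non_responders = [p for p in sent if p not in replied_set]
    k = min(sample_size, len(non_responders))  # can't call more people than exist
    return random.Random(seed).sample(non_responders, k)


# Hypothetical day's batch of 50 emails, two of which got replies
sent = [f"prospect{i}@example.com" for i in range(50)]
replied = ["prospect3@example.com", "prospect17@example.com"]
call_list = pick_call_sample(sent, replied, sample_size=10, seed=1)
print(call_list)
```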

Step #5: Set Your Next Test

Once your test has concluded, start the next one up.

You might decide to repeat the test if you suspect your results were due more to chance than to the changes you made. Or, if you’re reasonably confident in your results, you might move on to testing something different.

In any case, keep this rule in mind: always be testing. Always be working towards a goal. It’s only through this kind of consistent commitment to optimization that you’ll get the best possible results out of your cold email campaigns.